A Poisoned Apple

Think different, but not too different… A company full of PR specialists and an all-star marketing team is fumbling its child pornography prevention effort very, very badly.

It’s all about intentions for Apple

It is hard to fathom how “child pornography is bad” can be turned into a losing argument. After all, people who self-identify anywhere along the political spectrum will fervently support this statement in unison, and they even seem to broadly agree on the approaches that can be used to curb its spread.

Yet Apple, the world’s most valuable company, the world’s most profitable cell-phone manufacturer, and the company that almost bought Reese Witherspoon’s entire catalogue, found itself in hot water after announcing it would take action to proactively stop child pornography… by matching photos in people’s iCloud accounts against a centralized database.

At this point, your eyebrows should be raised. NeuralHash, the AI technology Apple plans to use to spot child sexual abuse material (CSAM), is, in Apple’s own words, not invasive at all and will not compromise the privacy of your pictures.

I don’t blame you if you’re not convinced, especially since iCloud security has failed before. (It is surprising that Jennifer Lawrence has not come out vocally against NeuralHash, since she was arguably the most prominent victim of the 2014 leak!) But Apple is not entirely bullshitting here: child protection organizations such as the National Center for Missing & Exploited Children (NCMEC) maintain established, extremely mature databases of hashes of known CSAM, which can credibly identify images that are genuinely CSAM.

Losing the War of Rhetoric

This brings us to Apple’s most surprising failure here: its inability to convince people to support something that’s supposedly good.

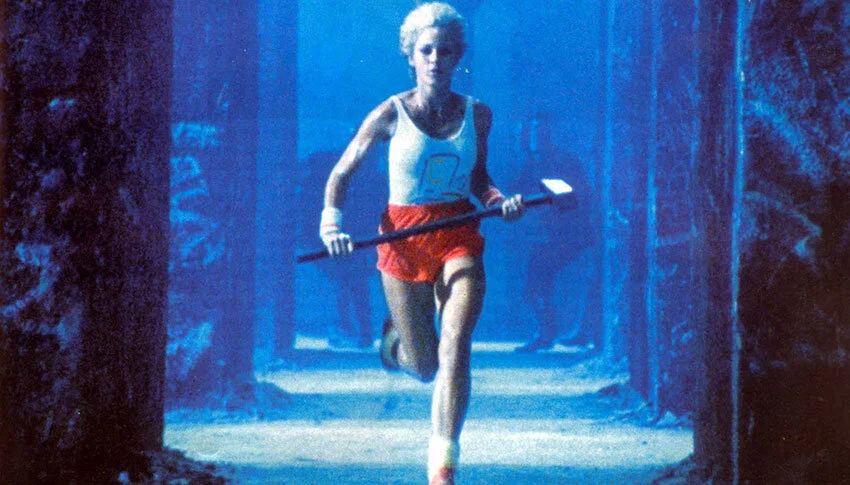

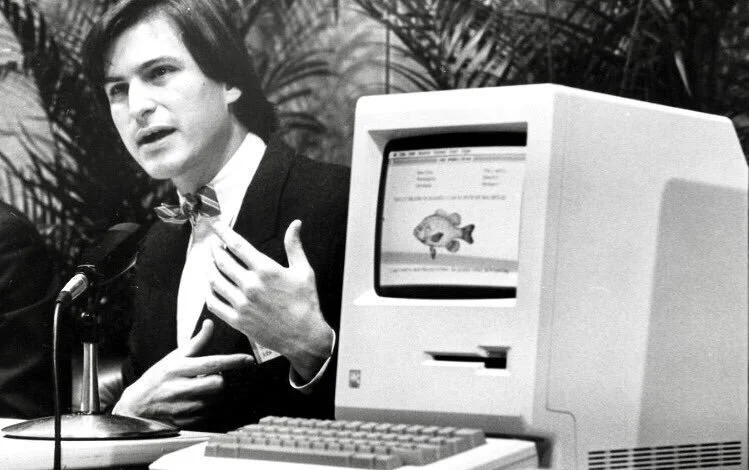

Apple as a company provides all the materials you will ever need to teach a masterclass on marketing. The ‘1984’ ad, the ‘Think Different’ slogan, the silhouette iPod commercials, the ‘MacBook Air fits into a manila envelope’ stunt… apart from genuine engineering failures such as the notorious AirPower and the iPhone 4’s antenna, this is a company that simply doesn’t miss. But Apple’s efforts to promote NeuralHash as a way to fight CSAM are spiraling into a mini-crisis that could dampen long-term consumer loyalty to Apple products.

We don’t need to rewind too far, but it’s worth remembering that several years ago, when faced with intensifying competition from Android devices, Apple started pushing privacy as its first and foremost selling point. Apple came out with an impressive series of ads promoting its uniquely robust security, which it presented as something only a closed ecosystem like iCloud could offer.

They didn’t stop there either. Apple has a dedicated privacy page on which it declares privacy a “fundamental human right.” I can’t help but agree with them here: in an age of increasingly sophisticated digital surveillance, a guarantee of privacy is something everyone should be entitled to. It’s just that doubling down on this message as what sets Apple apart from other big tech companies, and then announcing it wants to detect whether you have CSAM on your devices, is pretty much shooting itself in the foot.

The Avalanche

We don’t know how much and how far this news will eventually reverberate. But Apple’s actions so far show one thing: they know they have, at the very least, miscommunicated, and they’re doing as much damage control as possible. Craig Federighi, Apple’s in-house hair icon, was recently interviewed by The Wall Street Journal’s tech journalist Joanna Stern. He doubled down on how NeuralHash is actually a brilliant way to avoid surveillance of users’ photos, and indicated that users who are truly bothered can switch off their iCloud Photo Library.

By now, though, it’s got me asking: if this is true, doesn’t it defeat the whole purpose of detecting CSAM? Thanks to Craig, it is now public knowledge that to keep your disturbing images private forever, you just use an iOS device, turn off iCloud, and only send them to a trusted person via encrypted messaging apps such as Signal or Telegram. Ta-dah, no one will ever get you, maybe not even the FBI.

Asuhariet Ygvar, who discovered that Apple had quietly shipped NeuralHash code in iOS 14.3, successfully reverse-engineered it, wrote it all into a Python script, and kindly shared it on GitHub. While TechCrunch has covered the technical nitty-gritty of Ygvar’s code in more depth, what you need to know is that researchers quickly used it to produce a “hash collision”: two completely different images that NeuralHash assigns the same hash. That is a disaster for any system whose whole job is to flag images by their hash. Guess what? That is exactly what Apple wants to run on your iPhone.
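To make “hash collision” a little more concrete, here is a toy sketch. It is not NeuralHash, which runs a neural network; as an assumption it swaps in the much simpler “average hash” (aHash), and the function names (`average_hash`, `hamming`, `matches`) and the two 4x4 “images” are hypothetical. The idea it illustrates is the same: a perceptual hash keeps only a coarse fingerprint of a picture, so images with very different pixels can end up with identical fingerprints.

```python
"""Toy perceptual-hash matcher illustrating a hash collision.

Assumption: this is NOT NeuralHash. Apple's system runs a neural network;
here a much simpler "average hash" (aHash) stands in for illustration only.
"""

from typing import List, Set

Image = List[List[int]]  # grayscale pixel values 0-255, already shrunk to a tiny grid


def average_hash(img: Image) -> int:
    """Build a fingerprint: one bit per pixel, 1 if brighter than the mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bits in which two fingerprints differ."""
    return bin(a ^ b).count("1")


def matches(h: int, database: Set[int], max_distance: int = 0) -> bool:
    """Flag a fingerprint within max_distance bits of any known fingerprint."""
    return any(hamming(h, known) <= max_distance for known in database)


# Hypothetical 4x4 images with very different pixel values:
# a hard black/white split and a soft mid-gray gradient.
image_a = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]
image_b = [
    [90, 100, 140, 150],
    [95, 105, 145, 155],
    [92, 102, 142, 152],
    [97, 107, 147, 157],
]

if __name__ == "__main__":
    database = {average_hash(image_a)}  # pretend image_a is a "known" image
    print(average_hash(image_a) == average_hash(image_b))  # True: a collision
    print(matches(average_hash(image_b), database))  # True: image_b is flagged
```

The collisions reported against NeuralHash were produced with more sophisticated techniques than this, but the principle carries over: the fingerprint throws away most of the image, and tolerating a few differing bits (`max_distance` above) to survive resizing or recompression only widens the net.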

It’s all too humiliating for Apple because of how pompously pro-privacy it has been in the past, as I mentioned in the last section. In fact, data privacy is so integral to everyday users’ experience that even China, not exactly known for guaranteeing its citizens’ privacy, recently passed one of the strictest data privacy laws in the world. Apple essentially walked into a trap of its own making, apparently without any self-awareness, because all of its promotional materials explicitly stating “what happens on your iPhone stays on your iPhone” are still floating around the internet.

My Take?

Okay, hear me out: it’s not easy for me to stay objective, since I am fully embedded in the Apple ecosystem. I even own a full-sized HomePod, despite knowing how incredibly stupid Siri is. But unlike BatteryGate and AntennaGate, where Apple really lied to our faces, I think NeuralHash is genuinely meant to be a way around surveillance of private devices. Having watched Craig’s video in full and broken down the points he (hurriedly and nervously) made, I think the technology is sound on paper: all it does is compute a compact fingerprint, or “hash,” of each photo and check whether it matches the fingerprint of a known image in the CSAM database.

But I don’t want to defend Apple’s decision to proceed either. I haven’t noticed a huge slide in Apple’s market value, but I do think enough AI and surveillance-tech experts have taken a closer look and deemed this thing not ready for prime time. The term “hash collision” sounds bad enough that you don’t need to be a computer expert to be alarmed. I honestly think it is in Apple’s best interest to delay the launch of NeuralHash, not just because the technology may malfunction in some ways, but also because Apple itself, with the help of Craig’s “clarification,” has publicly described a way to evade it.

Some afterthoughts

MacRumors, one of the largest Apple rumor sites, recently conducted a poll to find out whether CSAM detection and NeuralHash will change people’s willingness to stay in the Apple ecosystem. The poll is not scientific, of course, and the site’s users skew overwhelmingly toward fervent Apple fans. Though most respondents don’t seem to be in a rush to leave Apple, only 47% have zero concern over CSAM detection; that is enough of a no-confidence vote that I think Tim Cook should think twice before rushing to approve it.

I am a simple man, and I believe any technology that raises concerns from average users and industry experts alike should undergo scrutiny and be rolled out in stages, just to see whether it is as functional as initially claimed. Many parents, for instance, are rightfully worried that images of their child bathing or innocently running around the yard will be misidentified as CSAM and subsequently get them in trouble. We won’t know how many of their concerns are valid until NeuralHash is operating out in the open, but in the meantime, we all should sit tight.